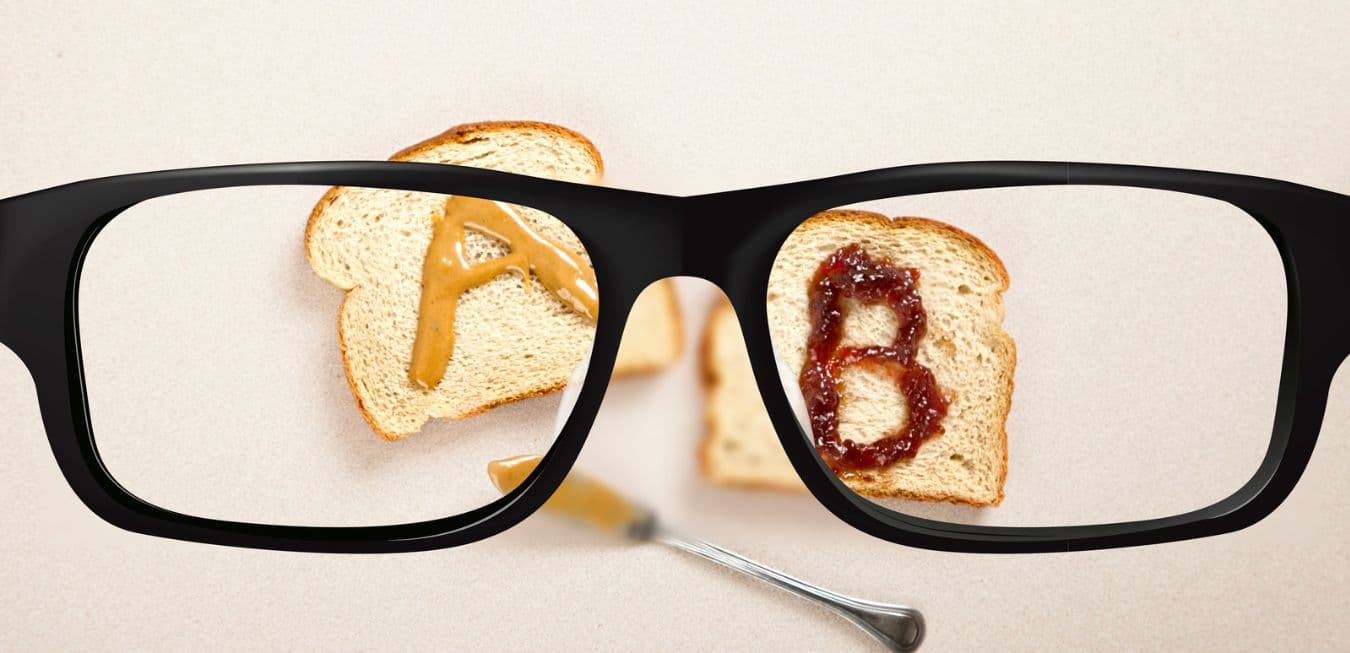

What is A/B Testing?

A/B Testing, also known as Split Testing, is a technique used to compare two or more versions of a web page or app to determine which one performs better. In the simplest case there are two versions: the "control" (A), which is the current version, and the "treatment" (B), which contains the change being tested. Visitors are randomly shown either version A or version B, and their behavior is tracked. After the experiment has run for the planned length of time, the performance data for both versions is gathered and compared to decide which version achieved better results on key metrics, such as engagement and conversion rate.

A/B Testing helps improve the content, features, and design of a website or app. It allows organizations to make decisions based on data rather than relying solely on intuition.

Benefits of A/B Testing

A/B Testing is an excellent tool for marketers. It lets them compare different versions of web pages, email campaigns, or UI designs to understand which works best for visitors and customers. Acting on these findings leads to a better user experience, higher engagement, and higher conversion rates.

A/B Testing has several advantages. Marketers can make changes based on actual data instead of guessing what direction their website should take, and the test results show which adjustments actually benefit customers.

It also gives insight into visitor behavior and audience segmentation. Marketers can find out which elements perform best and see how different types of visitors interact with different pages. This helps them create content tailored to specific audience segments, which can increase engagement and conversions.

How to Set Up an A/B Testing Campaign

A/B Testing shows you which version of a website works best. To run a test, you create at least two versions, track how people use each one, and then analyze the data to see which version performed better. The following sections walk through the steps for setting up an A/B testing campaign.

Establish a Hypothesis

It's critical to develop a hypothesis before starting an A/B testing campaign. A hypothesis clarifies your goals and gives structure to your campaign.

Your hypothesis must be based on the goals of your business, website, or product, such as increasing conversions or clicks, improving navigation and engagement, or building brand loyalty and trust. Once you've chosen a goal, write a statement describing a change you expect to improve performance. Make sure the hypothesis is measurable; for example, "Changing the signup button text from 'Submit' to 'Start free trial' will increase signups."

You should also separate low-risk hypotheses from high-risk, high-reward ones. Changing the color or shape of a button is an example of a low-risk hypothesis, while rearranging a website's navigation system is a high-risk one. Risky hypotheses can bring larger gains but also carry a higher chance of failure, and an unsuccessful high-risk test can cause a substantial loss of user engagement.

The type of hypothesis you choose depends on your team's experience. Experienced teams tend to test riskier hypotheses, while less experienced teams usually start with safer ones until they're more confident.

Choose Your Variations

With your goals and hypothesis set, it's time to create your variations. In a basic A/B test you compare two pages: the original, called the "control" page, and a modified one, called the "experimental" (or "treatment") page.

The experimental page should differ from the control page in the element you want to test, so you can measure the effect of that change on your goal. Every element you test therefore needs at least two versions: the original and at least one variation.

When creating your variations, think about which themes you want to test, and keep the number of changed variables small. If you change too many at once, it becomes hard to tell which change caused the result.

In A/B testing campaigns, some elements to experiment with are:

- Conversion rates: Test CTA elements like copy and design

- Site navigation: Play with menus or product categories

- User engagement: See which pages get more user interactions

- Performance metrics: Measure user experience with load times or pop-up placements

- Checkout process: Change checkout flow for faster conversions

Once you have two versions of each element, decide how long the test will run and which metric from each website interaction is relevant. Test only one variable at a time so the effect of each change can be isolated and analyzed.

Set Your Goals

Before setting up an A/B testing campaign, identify and quantify your goals. What do you want to achieve? Common targets include boosting conversion rates, increasing website visits, reducing bounce rates, optimizing content for better search engine rankings, and driving more engagement on social media.

Next, formally define the metrics you'll use to measure the success of your A/B test. Will it be based on page visits? Conversion rate? Average time spent on page? Decide this in advance so you have clear criteria for evaluating the outcome and can make informed decisions.

Beyond the metrics themselves, decide how long the test should last and how large a sample you need for reliable results. Also consider segments such as device type (mobile vs. desktop) and geographic location. These steps set you up to collect quantifiable, trustworthy data from your A/B test.
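To estimate how large a sample you need, one common approach is the normal-approximation formula for comparing two conversion rates. The sketch below is a minimal Python version, assuming a two-sided test, a significance level (`alpha`) of 0.05, and 80% statistical power; these defaults, the function name `sample_size_per_variant`, and the example rates are illustrative assumptions, not values from any particular tool.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variant(baseline_rate: float, expected_rate: float,
                            alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate visitors needed in EACH variant to detect the change from
    baseline_rate to expected_rate with a two-sided two-proportion z-test."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for the significance level
    z_beta = NormalDist().inv_cdf(power)           # value corresponding to the desired power
    # Sum of the Bernoulli variances of the two conversion rates.
    variance = baseline_rate * (1 - baseline_rate) + expected_rate * (1 - expected_rate)
    effect = expected_rate - baseline_rate         # minimum difference worth detecting
    return ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


# Hypothetical example: current conversion rate 5%, hoping the variant reaches 6%.
n = sample_size_per_variant(0.05, 0.06)
print(f"Visitors needed per variant: {n}")
```

For these hypothetical rates the result is a little over 8,000 visitors per variant. Halving the expected lift roughly quadruples the required sample, which is why small changes need large audiences.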

Select Your Audience

When setting up an A/B test, in which you run a control (A) and a variant (B) of an element, start by choosing the right audience. You can segment visitors by age, gender, location, language, and so on.

On your platform, decide whether to identify visitors with unique identifiers (such as a user ID) or with cookies. These let you track how many times each variant was shown and how often it led to a conversion.
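Whichever identifier you use, a returning visitor should keep seeing the same variant; otherwise the data for A and B gets mixed. One common technique is to hash the identifier together with an experiment name and use the hash to pick a bucket. This is a minimal sketch; `assign_variant`, the experiment name `"homepage-headline"`, and the even split between variants are hypothetical.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, variants: tuple = ("A", "B")) -> str:
    """Map a visitor to a variant deterministically (same input, same output)."""
    # Including the experiment name keeps assignments in different
    # experiments independent of each other.
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Interpret the hex digest as an integer and take it modulo the number of variants.
    bucket = int(digest, 16) % len(variants)
    return variants[bucket]


# Usage: the user ID would come from your login system or a cookie.
variant = assign_variant("user-12345", "homepage-headline")
print(variant)
```

Because the assignment is computed rather than stored, any server can recompute it, and the split stays close to even across many visitors.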

Then set up different versions of the same page with minor variations, such as a different headline or image.

Finally, decide on the campaign duration. Allow enough time to collect sufficient responses, but not so long that changes in customer behavior make the results hard to interpret.
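Once you know the required sample size, a rough duration follows from your daily traffic. The sketch below assumes every daily visitor enters the experiment and that traffic is split evenly across variants; it also rounds up to whole weeks, a common practice for capturing both weekday and weekend behavior. The function name `estimate_duration_days` and the traffic figures in the example are hypothetical.

```python
from math import ceil


def estimate_duration_days(sample_per_variant: int, num_variants: int,
                           daily_visitors: int, round_to_weeks: bool = True) -> int:
    """Rough number of days needed to reach the target sample in every variant."""
    total_needed = sample_per_variant * num_variants
    days = ceil(total_needed / daily_visitors)
    if round_to_weeks:
        # Run full weeks so every day of the week is represented equally.
        days = ceil(days / 7) * 7
    return days


# Hypothetical example: 8,155 visitors per variant, two variants, 1,000 visitors a day.
print(estimate_duration_days(8155, 2, 1000))  # prints 21 (17 days of traffic, rounded up to 3 weeks)
```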

Analyze the Results

Once your A/B test has finished running, analyze the results. Don't look only at the conversion rate; consider other success metrics, such as average order value or number of pages viewed per session.
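Computing several metrics side by side makes it harder to miss a trade-off, for example a variant that raises conversion rate but lowers average order value. This minimal sketch assumes a hypothetical per-session record (`Session`) holding the variant shown, pages viewed, whether the session converted, and the order value; in practice these fields would come from your analytics or A/B testing tool.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Iterable


@dataclass
class Session:
    variant: str
    pages_viewed: int
    converted: bool
    order_value: float = 0.0  # revenue from the order, 0.0 if no purchase


def summarize(sessions: Iterable[Session]) -> dict:
    """Group sessions by variant and compute conversion rate, AOV, and pages per session."""
    groups = defaultdict(list)
    for s in sessions:
        groups[s.variant].append(s)

    summary = {}
    for variant, rows in groups.items():
        orders = [s.order_value for s in rows if s.converted]
        summary[variant] = {
            "sessions": len(rows),
            "conversion_rate": len(orders) / len(rows),
            # Average order value is computed over converting sessions only.
            "avg_order_value": sum(orders) / len(orders) if orders else 0.0,
            "pages_per_session": sum(s.pages_viewed for s in rows) / len(rows),
        }
    return summary


# Usage with a few hypothetical sessions.
sessions = [
    Session("A", pages_viewed=3, converted=True, order_value=50.0),
    Session("A", pages_viewed=1, converted=False),
    Session("B", pages_viewed=4, converted=True, order_value=40.0),
    Session("B", pages_viewed=2, converted=False),
]
for variant, metrics in sorted(summarize(sessions).items()):
    print(variant, metrics)
```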

Before interpreting the data, make sure the difference between versions is statistically significant. An A/B testing significance calculator can check this for you, and a sample size calculator, used before the test starts, tells you how many visitors you need for reliable results.
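Many significance calculators for conversion rates rely on a two-proportion z-test. The sketch below is a minimal version of that test using only Python's standard library; it is not the exact formula of any specific calculator, and the conversion counts in the example are hypothetical. A p-value below your chosen significance level (commonly 0.05) suggests the difference is unlikely to be due to chance alone.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Two-sided z-test for the difference between two conversion rates.

    Assumes at least one conversion and one non-conversion overall;
    otherwise the standard error is zero and the test is undefined.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both versions convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical results: 400 of 8,000 visitors converted on A, 480 of 8,000 on B.
z, p = two_proportion_z_test(400, 8000, 480, 8000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

With these numbers, z is about 2.77 and the p-value is well below 0.05, so the higher conversion rate for B would count as statistically significant at that level.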

Interpret the results using both quantitative data (such as conversion numbers) and qualitative data (such as user feedback). Also consider external factors that can change user behavior, such as seasonality or events like COVID-19 or Brexit.

Consider how these external influences might affect future campaigns. This helps you make decisions based on a better understanding of your customers and your product or service.

Conclusion

A/B Testing is an affordable way to optimize a website or web app, and it can be applied to many different elements. Which tests you can run depends on the capabilities of your A/B testing tool.

The size and complexity of your audience affect the results. Larger sample sizes produce more precise results. Smaller audiences can still give valid results, but they need longer tests and can reliably detect only larger differences between versions.
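The link between sample size and precision can be made concrete with the standard error of an observed conversion rate $\hat p$, measured on $n$ visitors when the true rate is $p$. This is the standard formula for a proportion, worked here with hypothetical numbers:

$$
\begin{aligned}
\mathrm{SE}(\hat p) &= \sqrt{\frac{p(1-p)}{n}} \\
p = 0.05,\ n = 1000: \quad \mathrm{SE} &= \sqrt{\frac{0.05 \times 0.95}{1000}} \approx 0.0069 \\
p = 0.05,\ n = 4000: \quad \mathrm{SE} &= \sqrt{\frac{0.05 \times 0.95}{4000}} \approx 0.0034
\end{aligned}
$$

Because $n$ sits under a square root, quadrupling the sample only halves the uncertainty, from roughly 0.7 to roughly 0.3 percentage points in this example.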

Set up and used correctly, A/B testing provides reliable information about customer preferences, which leads to better product decisions.

Frequently Asked Questions

What is A/B Testing?

A/B Testing compares two or more versions of a web page or app to determine which performs better. It is also known as Split Testing or bucket testing. In a typical A/B test, the control version (A) is the version currently in use, and the variation (B) is a modified version with changes such as different copy, images, or layouts.

What are the benefits of A/B Testing?

A/B Testing offers several benefits. It allows you to test different versions of a page or app to see which performs better. This helps you to optimize the user experience and increase conversions. A/B Testing also allows you to identify what works and what doesn't, which can inform future design and development decisions.

How long should an A/B test run?

The length of an A/B test depends on your traffic volume and the size of the difference you expect the change to make. Run the test long enough for the results to be statistically meaningful. Generally, the more traffic you have, the shorter the test can be; with low traffic, the test needs to run longer.